
React SEO: How to Build Search-Friendly Pages in React

Posted by Rafael Quintanilha on October 3, 2023

Digital advertising is a multi-billion-dollar industry that has been growing sharply. In fact, worldwide spending on it has nearly doubled since 2016. The motive for this is clear: companies want to be seen on the Internet.

On the one hand, you can pay a lot of money to have your ad featured, while on the other hand, you can be seen for free. That’s due to SEO, or Search Engine Optimization. Attracting organic traffic to your website is one of the most profitable actions a business can take, and it is by no means a trivial thing to achieve.

In this article, I’ll guide you on how to build SEO-friendly pages using React, one of the most popular libraries for building modern websites. I'll also show you how these methods can be applied to dynamic content with the help of a good React CMS.


The Importance of SEO

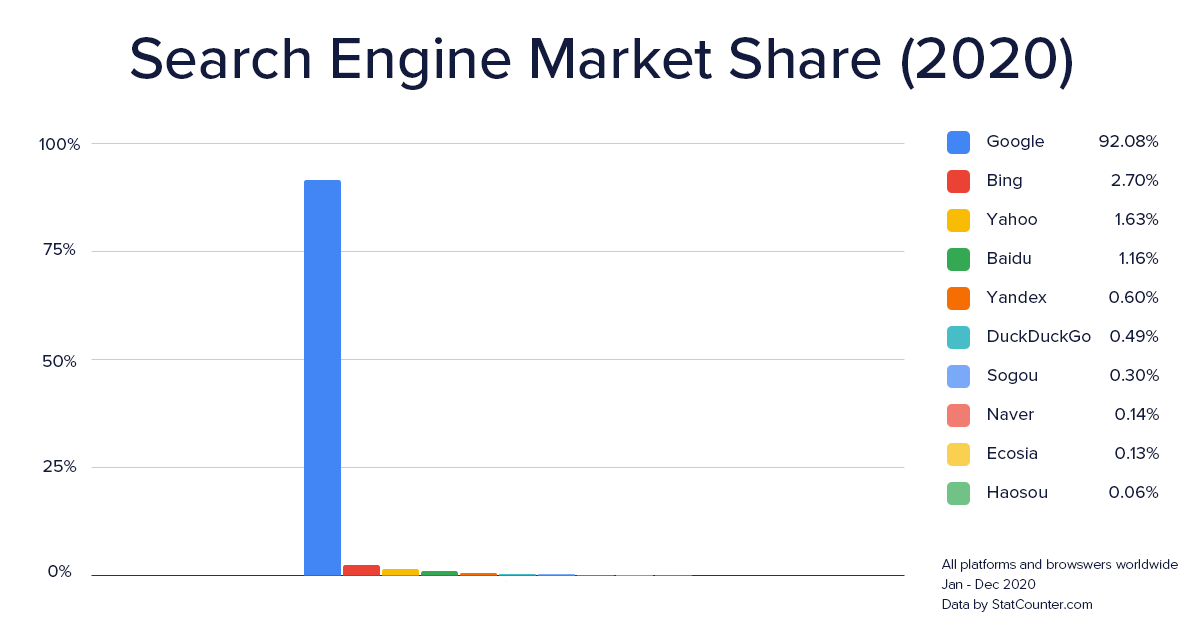

Google owns 92% of the search engine market share worldwide and this number has been fairly steady since 2009. It makes sense, then, to target Google and what it considers good practice when developing your SEO strategy.

The core concept is that the higher your website ranks on the Search Engine Results Page (SERP), the higher the likelihood users will click on it. While this is fairly intuitive, what might surprise you is how large the gap in click-through rates (CTR) is among the first positions: recent studies have found that the combined click-through rates for positions 1 and 2 on a SERP are approximately double the combined click-through rates for positions 3 and 4! In other words, your chances of being seen depend heavily on how well you fare on the results page.

How Google Ranks Your Website

Before we learn how to enhance React SEO, we need to understand how the whole process works. In simple terms, Google uses a bot called Googlebot (how creative!) to crawl your application. This robot is called a web crawler.

The crawler will load your application, analyze it, and rank it against a set of criteria. While exactly how each criterion is evaluated is unknown, it pays to satisfy as many of them as possible. An application optimized for SEO will follow some rules to increase the likelihood of being highly ranked.

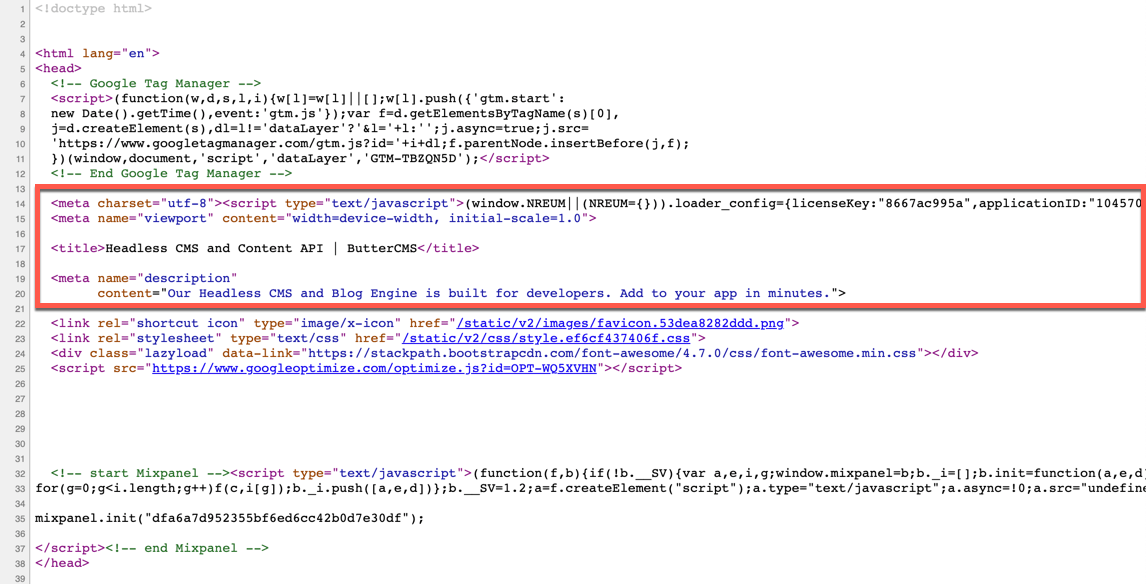

Meta Tags

One of the most critical aspects of an SEO-friendly application is using the correct meta tags. Meta tags live in the <head> element of each page and describe to the web crawler what that page is about. It is therefore essential that every page on your website has meta tags that are as accurate as possible. This is one of the shortcomings of a barebones React application, and we will see how to overcome this problem later.
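To make this concrete, here is a minimal sketch of the tags a single page might place in its <head>. The `PageMeta` shape, the function names, and the "Acme" pricing page are all made up for illustration; the point is only that each page gets its own title and description.

```typescript
// Hypothetical shape for the per-page data we want crawlers to see.
interface PageMeta {
  title: string;
  description: string;
}

// Escape characters that would otherwise break out of an HTML element or attribute.
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

// Build the tags that belong inside <head> for one page.
function renderHeadTags(page: PageMeta): string {
  return [
    `<title>${escapeHtml(page.title)}</title>`,
    `<meta name="description" content="${escapeHtml(page.description)}">`,
  ].join("\n");
}

// Example page (invented for this sketch).
const pricingHead = renderHeadTags({
  title: "Pricing | Acme",
  description: 'Plans for teams of any size, from "free" to enterprise.',
});
```

In a barebones React app there is only one HTML file, so output like `pricingHead` would be the same for every route unless something updates it per page.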

Crawling Budget

We live in an era of limited resources, and this will always be true. The same concept applies to search engines and their crawlers: they need to spend their time wisely. The result of this policy is what is called a crawling budget, or how much time a crawler will spend analyzing your website. As you might guess, how efficiently Google parses your application is heavily affected by your app's speed. Any effort to improve load time is therefore welcome, and this can become a problem for React apps.

Accessible URLs

It is imperative for React SEO to have SEO-friendly URLs. In Google’s words: “URLs with words that are relevant to your site's content and structure are friendlier for visitors navigating your site.” The full set of best practices can be seen here. This factor turns out to be one of the major problems with Single Page Applications (SPAs), such as regular React applications. Since SPAs are rendered on the client side, the whole application can live within a single URL.
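One common way to get URLs made of relevant words is to derive a "slug" from the page title. The sketch below is one possible approach, not an official recipe; the `slugify` name and the `/blog/` prefix are my own choices for the example.

```typescript
// Turn a human-readable title into a lowercase, hyphen-separated URL segment.
function slugify(title: string): string {
  return title
    .normalize("NFKD")                 // split accented letters into base letter + accent
    .replace(/[\u0300-\u036f]/g, "")   // drop the accent marks
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-")       // collapse every other run of characters into one hyphen
    .replace(/^-+|-+$/g, "");          // trim leading and trailing hyphens
}

const postPath = `/blog/${slugify("React SEO: How to Build Search-Friendly Pages in React")}`;
// → "/blog/react-seo-how-to-build-search-friendly-pages-in-react"
```

A path like this tells both visitors and crawlers what the page is about before it even loads.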

There are several other factors that affect React SEO. However, the above are the most flagrant shortcomings of a React application and will be the object of discussion for the next sections.

React SEO: The Problem with React Applications

So far we have learned that search engines use robots called crawlers to parse your website and rank it accordingly in their SERP. Unfortunately, several of the aspects that are valuable to search engines are not found in standard React applications. We will now analyze what exactly React’s limitations are and, later on, learn how we can overcome them.

Static vs Dynamic Sites

Back in the day, all web pages were static. The process is as follows: the user makes a request to a server for a given URL. The server then returns a set of HTML, JavaScript, and CSS files that are rendered in the user’s browser.

This old-fashioned process is ideal for robots: they have all the assets for that web page when they access it. However, things changed and web applications became more complex. Content is often dynamic, meaning that it changes constantly. Think about your Twitter feed: all content there is in constant motion, even though the URL remains the same.

Dynamic content is a problem for crawlers because the data might not be available during their crawling time (remember the crawling budget?). Also, it is loaded via JavaScript, which means that the browser needs to download, parse, and execute the code before anyone is able to see anything. This substantially increases the time a crawler needs to process the content, hence affecting your SEO.

How SPAs Work

As mentioned before, a regular React application is a Single Page Application. This means that your whole app lives on a single page, even though you can simulate different routes and pages.

While SPAs are very convenient for dynamic applications, they lack good support for SEO. For starters, if all you have is a single page, your meta tags can hardly describe each part of your application accurately. Moreover, you won’t be able to generate unique URLs out of the box and will have to resort to a client-side routing library like react-router.

And finally, an SPA is a single webpage where the JavaScript is responsible for updating the content on the screen. That usually means that the JavaScript code is bulky, which leads to a longer parse time in the browser. Ultimately, your application will load more slowly (due to the time needed to download and parse a larger JavaScript file) and your crawling budget will run out quickly.
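To see why this matters, it helps to look at what the markup contains before any JavaScript has run. The sketch below is a rough approximation only: the two HTML documents are invented, and the regex-based text extraction is a shortcut for illustration, not how a real crawler works.

```typescript
// Return the body text available in the markup WITHOUT executing any JavaScript.
function bodyTextWithoutJavaScript(html: string): string {
  const body = /<body[^>]*>([\s\S]*)<\/body>/i.exec(html);
  const markup = body ? body[1] : html;
  return markup
    .replace(/<script\b[^>]*>[\s\S]*?<\/script>/gi, " ") // scripts are not run here
    .replace(/<[^>]+>/g, " ")                            // strip the remaining tags
    .replace(/\s+/g, " ")
    .trim();
}

// What a typical SPA sends: an empty mount point plus a script bundle.
const spaShell = `<!DOCTYPE html>
<html>
  <head><title>My App</title></head>
  <body>
    <div id="root"></div>
    <script src="/static/js/bundle.js"></script>
  </body>
</html>`;

// The same route with its content already present in the HTML.
const prerenderedPage = `<!DOCTYPE html>
<html>
  <head><title>Pricing | My App</title></head>
  <body>
    <div id="root"><h1>Pricing</h1><p>Plans for teams of any size.</p></div>
    <script src="/static/js/bundle.js"></script>
  </body>
</html>`;

const shellText = bodyTextWithoutJavaScript(spaShell);              // → ""
const prerenderedText = bodyTextWithoutJavaScript(prerenderedPage); // → "Pricing Plans for teams of any size."
```

With the SPA shell, there is nothing to read until the bundle has been downloaded, parsed, and executed, which is exactly the extra work described above.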

It may look like React applications are doomed to rot in the dark corners of the SERP, but it doesn’t need to be that way. Next, we will learn what we can do to overcome the aforementioned issues.

Server-Side Rendering

Server-side rendering (SSR), the approach behind so-called isomorphic applications, is a clever solution to the SPA's shortcomings.

In order to avoid the single page with bulky JavaScript, the React community evolved to a model where the application is rendered ahead of time on the server, in this case at build time (an approach also known as static site generation). That means that instead of sending a large JavaScript file to the client and letting it figure out what to render, a build tool will process the code on the server and generate multiple pages. These pages will then be served on their specific routes, in the good ol’ static website model.
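The idea can be sketched in a few lines. This is a toy model under stated assumptions: `renderDocument` stands in for a real renderer (a React framework would typically rely on something like `renderToString` from `react-dom/server`), and the `Page` shape and sample routes are invented for the example.

```typescript
// Hypothetical description of one page of the site.
interface Page {
  path: string;
  title: string;
  body: string; // HTML for the page content, assumed to be safe already
}

// Stand-in for the framework's renderer: turns one page into a full HTML document.
function renderDocument(page: Page): string {
  return (
    `<!DOCTYPE html><html><head><title>${page.title}</title></head>` +
    `<body><main>${page.body}</main></body></html>`
  );
}

// "Build step": render every route once, up front, and key the output by URL path.
function prerender(pages: Page[]): Map<string, string> {
  const output = new Map<string, string>();
  for (const page of pages) {
    output.set(page.path, renderDocument(page));
  }
  return output;
}

const site = prerender([
  { path: "/", title: "Home", body: "<h1>Welcome</h1>" },
  { path: "/pricing", title: "Pricing", body: "<h1>Pricing</h1>" },
]);
```

After the build, serving `/pricing` is just a lookup of ready-made HTML, with its own URL and its own title, and no client-side work is needed to see the content.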

The advantage of such a model is that you can keep working with a cutting-edge library such as React and still retain the biggest advantage of static websites — blazing fast load times!

However, whether SSR is ideal for your project is a product decision. As a rule of thumb, if your application has content that changes very often, a client-side application is probably the better option. Now, if your content is mostly static, and you have many web pages that would benefit from being found on a search engine (such as a product landing page or a blog post), SSR is the way to go.

Implementing an SSR Application in React

The most famous frameworks for developing SSR applications are Gatsby and Next.js. Although there are differences between them, their main goal is similar: to allow next-generation web applications to remain blazing-fast.

It is beyond the scope of this article to compare both technologies, but I will use Gatsby as an example of how the framework addresses the React SEO dilemma.

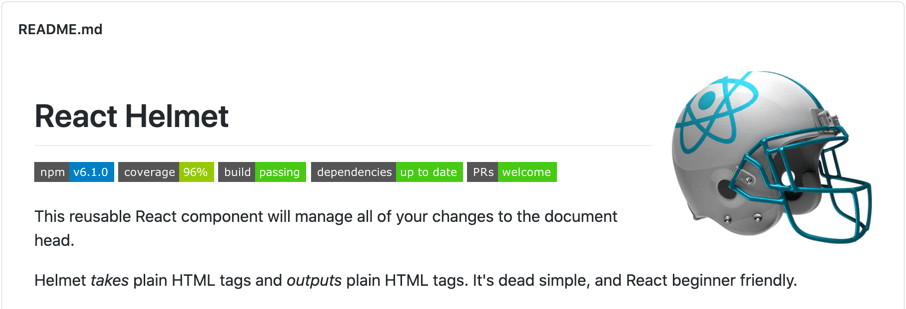

React-helmet

React-helmet is a widely used library for managing meta tags. It allows every page to have its own custom meta tags, which, as we have seen, is a major victory for React SEO. The library also allows for the addition of Open Graph tags, which directly impact the shareability of your content in potentially highly viral spaces such as social networks.
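As a rough sketch, the per-page data can be turned into the `title` and `meta` props that react-helmet's `Helmet` component accepts. The `PageSeo` shape, the `buildHelmetProps` helper, and the example URLs are hypothetical; only the `title` and `meta` prop names come from react-helmet itself.

```typescript
// Hypothetical per-page SEO data.
interface PageSeo {
  title: string;
  description: string;
  imageUrl?: string;
}

// Regular meta tags use `name`; Open Graph tags use `property`.
type MetaTag =
  | { name: string; content: string }
  | { property: string; content: string };

function buildHelmetProps(page: PageSeo): { title: string; meta: MetaTag[] } {
  const meta: MetaTag[] = [
    { name: "description", content: page.description },
    { property: "og:title", content: page.title },
    { property: "og:description", content: page.description },
    { property: "og:type", content: "website" },
  ];
  if (page.imageUrl) {
    // Image shown in link previews on social networks.
    meta.push({ property: "og:image", content: page.imageUrl });
  }
  return { title: page.title, meta };
}

// In a component, this object would be spread into Helmet:
//   <Helmet {...buildHelmetProps(page)} />
const blogPostSeo = buildHelmetProps({
  title: "React SEO Basics",
  description: "How to build search-friendly pages in React.",
  imageUrl: "https://example.com/cover.png",
});
```

Keeping this logic in one helper makes it easy for every page to pass its own values instead of sharing a single set of tags.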

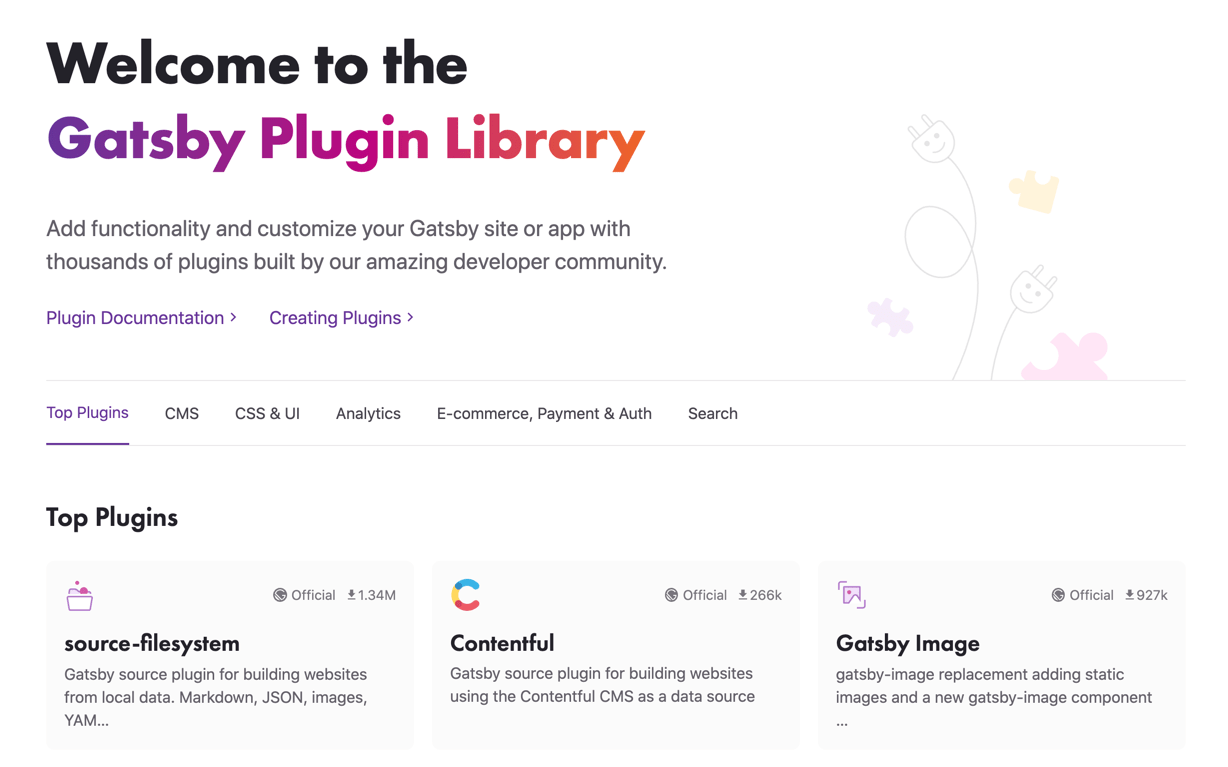

Fortunately, Gatsby provides a wide set of plugins that can be used to simplify your life as a developer. The gatsby-plugin-react-helmet plugin is one of the most widely used and well-maintained plugins in the ecosystem.

If you haven’t tried it already, this comprehensive tutorial will help you get up on your feet with Gatsby and react-helmet.

Gatsby-image

We have repeatedly stressed how harmful large JavaScript files are to your React SEO. However, I have never found a potential performance threat as strong as images.

Developers often optimize what is in their control: dependencies, code splitting, etc. But what many overlook is that a large image loaded on your website can severely impact its performance. While code is under control, static assets are often not. They are provided by content creators, marketers, authors, and others. Therefore, we need a plan to mitigate the potential impacts.

My favorite solution for this problem is gatsby-image, another plugin meant to save your life. Gatsby-image takes care of, among several other things, optimizing your images and serving them in the most efficient and responsive format. It enhances the look and feel of your app, not to mention the performance and ultimate SEO gain.
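One of the techniques behind responsive images is the HTML `srcset` attribute, which lets the browser pick the smallest file that fits the screen. The sketch below builds such a value by hand. It assumes a hypothetical image host that resizes an image when given a `w` query parameter, and it is not a description of what gatsby-image does internally.

```typescript
// Assumption: the image host returns a resized image for `?w=<width in pixels>`.
function buildSrcSet(baseUrl: string, widths: number[]): string {
  return widths
    .slice()                 // copy so the caller's array is not reordered
    .sort((a, b) => a - b)   // list candidates from smallest to largest
    .map((width) => `${baseUrl}?w=${width} ${width}w`)
    .join(", ");
}

const heroSrcSet = buildSrcSet("https://images.example.com/hero.jpg", [1280, 320, 640]);
// → "https://images.example.com/hero.jpg?w=320 320w, https://images.example.com/hero.jpg?w=640 640w, https://images.example.com/hero.jpg?w=1280 1280w"
```

The resulting string would go in the `srcset` attribute of an `<img>` tag, so a phone can download the 320-pixel version while a desktop gets the larger one.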

Other Plugins

Gatsby has a vast library of plugins. Many of them are zero-config, meaning that you gain React SEO without much more effort than an npm install. As an example, gatsby-plugin-sitemap will generate a sitemap for your website, helping bots to crawl your content in the right way.
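For reference, a sitemap is just an XML file listing the URLs you want crawled. The following hand-rolled sketch shows roughly what such a file looks like in the sitemaps.org format; the `buildSitemap` helper and the example.com URLs are illustrative and unrelated to the plugin's actual implementation.

```typescript
// Escape the characters that are special in XML.
function escapeXml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&apos;");
}

// Build a minimal sitemap: one <url><loc> entry per page path.
function buildSitemap(siteUrl: string, paths: string[]): string {
  const origin = siteUrl.replace(/\/+$/, ""); // avoid a double slash when joining
  const entries = paths.map(
    (path) => `  <url><loc>${escapeXml(origin + path)}</loc></url>`
  );
  return [
    '<?xml version="1.0" encoding="UTF-8"?>',
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">',
    ...entries,
    "</urlset>",
  ].join("\n");
}

const sitemapXml = buildSitemap("https://example.com/", ["/", "/pricing"]);
```

A plugin saves you from maintaining this list by hand, since it already knows every page the build produced.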

Route-based Code Splitting

Another great feature of Gatsby is the ability to serve static pages in their own URL (thus addressing the Accessible URLs point) and be wise about what should be included in that particular page.

Remember how SPAs are penalized for loading large chunks of JavaScript at all times? With route-based code splitting, the only JavaScript code sent from the server to the client is the code necessary to render whatever is on that particular page. The following article describes in detail how it works.
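A back-of-the-envelope comparison shows the effect. The manifest below is entirely hypothetical: the chunk names and sizes are made-up numbers chosen only to illustrate the arithmetic, not measurements from a real Gatsby build.

```typescript
// Hypothetical build output: JavaScript chunks and their sizes in KB.
const chunkSizesKb: Record<string, number> = {
  framework: 45,
  home: 12,
  pricing: 8,
  blog: 30,
};

// Hypothetical manifest: which chunks each route needs.
const routeChunks: Record<string, string[]> = {
  "/": ["framework", "home"],
  "/pricing": ["framework", "pricing"],
  "/blog": ["framework", "blog"],
};

function totalKb(chunks: string[]): number {
  return chunks.reduce((sum, name) => sum + (chunkSizesKb[name] ?? 0), 0);
}

// Single-bundle SPA: every visitor downloads every chunk, whatever the page.
const singleBundleKb = totalKb(Object.keys(chunkSizesKb)); // 45 + 12 + 8 + 30 = 95

// Route-based splitting: only the chunks listed for the requested route.
function kbForRoute(route: string): number {
  return totalKb(routeChunks[route] ?? []);
}

const pricingKb = kbForRoute("/pricing"); // 45 + 8 = 53
```

In this toy example, a visitor to the pricing page downloads 53 KB instead of 95 KB, and the gap grows with every page added to the site.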

Do I Need a Static Site Generator?

By all means, every advantage listed in the previous sections can be achieved with vanilla JavaScript and other tools. However, SSGs like Gatsby and Next.js will abstract the hassle away from you, to the point that a standard Gatsby application starts out with a 100 SEO score on Lighthouse, Google’s page-auditing tool.

More than recommending framework A or B, the goal of this post is to outline the importance of SEO, the shortcomings of barebones React applications, and which tools can be used to overcome them. Don’t feel pressured to switch and adopt a new technology tomorrow, but be aware that different businesses have different needs and it is your job to find the right tool.

Static Sites Can Be Dynamic

The last point worth mentioning is that, in fact, static sites can have dynamic content. Yes, I know I said before that they are two different kinds of applications, but bear with me for a moment.

If you opt for a Server Side Rendering strategy, your deployment process will include a build step. During this step, the framework will fetch the most up-to-date data available and construct the static pages. That means that the source of data can be constantly changing!

This ends up being one of the main advantages of such a process, given that it lets you leverage a headless CMS such as ButterCMS. Using this strategy, you can have your Gatsby application connected to ButterCMS and fetch all content from an external admin panel. The main advantage is that the constant editing of static content can be handed off to professionals better suited for it, like marketers and copywriters.

Every time a change is made to static content, or at defined intervals, a new build will be triggered, and once it is finished your content will be updated. The process is highly SEO-friendly and, when properly designed, will allow content creators to carefully choose the best features for the web crawler.
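The two triggers, a content change or an elapsed interval, can be expressed as a small decision function. This is a sketch of the policy only: the `BuildState` shape, the function name, and the 24-hour interval are assumptions for the example, and in practice a CMS would more likely notify the build system directly (for instance through a webhook) than be polled like this.

```typescript
// Hypothetical bookkeeping, all timestamps in milliseconds.
interface BuildState {
  lastBuildAt: number;
  lastContentChangeAt: number;
}

// Rebuild when content changed after the last build, or when the build is too old.
function shouldRebuild(state: BuildState, now: number, maxAgeMs: number): boolean {
  const contentChanged = state.lastContentChangeAt > state.lastBuildAt;
  const buildIsStale = now - state.lastBuildAt >= maxAgeMs;
  return contentChanged || buildIsStale;
}

const hourMs = 60 * 60 * 1000;
const maxAgeMs = 24 * hourMs; // assumed interval: rebuild at least once a day

// Built at hour 10, last edit at hour 9, checked at hour 11: nothing to do.
const upToDate = shouldRebuild(
  { lastBuildAt: 10 * hourMs, lastContentChangeAt: 9 * hourMs }, 11 * hourMs, maxAgeMs
); // false

// An editor published a change half an hour after the last build.
const afterEdit = shouldRebuild(
  { lastBuildAt: 10 * hourMs, lastContentChangeAt: 10.5 * hourMs }, 11 * hourMs, maxAgeMs
); // true

// No edits, but a full day has passed since the last build.
const afterADay = shouldRebuild(
  { lastBuildAt: 10 * hourMs, lastContentChangeAt: 9 * hourMs }, 34 * hourMs, maxAgeMs
); // true
```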

ButterCMS is a leading Gatsby CMS, and you can set up the integration with your Gatsby application in minutes!


Rafael Quintanilha has created apps for the web since 2012. As a Software Engineer, he has applied technology to solve real-world problems for clients both in Brazil and in the United States. Outside of work, Rafael is passionate about financial markets and is said to cook Brazil's finest barbecue. You can find more of his writings on his blog at rafaelquintanilha.com.